Abstract

In this project, we developed convolutional neural networks (CNNs) for a facial expression recognition task. The goal is to classify each facial image into one of the seven facial emotion categories considered in this study. We trained CNN models of different depths using gray-scale images from the Kaggle website [1]. We developed our models in Torch [2] and exploited Graphics Processing Unit (GPU) computation to expedite the training process. In addition to networks that operate on raw pixel data, we employed a hybrid feature strategy, training a novel CNN model on the combination of raw pixel data and Histogram of Oriented Gradients (HOG) features [3]. To reduce overfitting, we used several techniques, including dropout and batch normalization in addition to L2 regularization. We applied cross-validation to determine the optimal hyper-parameters and evaluated the performance of the developed models by examining their training histories. We also present visualizations of different layers of a network to show which facial features CNN models can learn.

Introduction

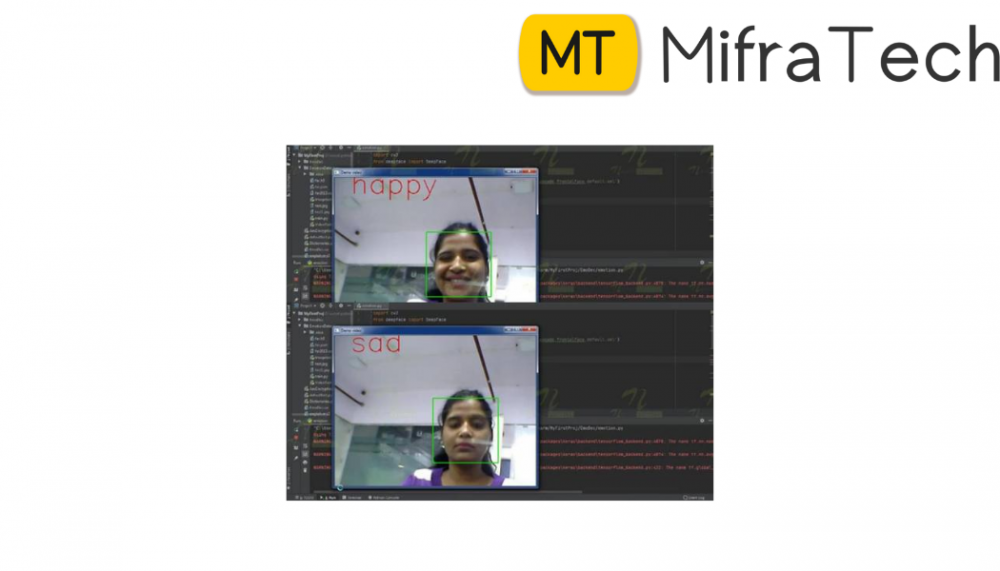

Humans interact with each other mainly through speech, but also through body gestures, to emphasize certain parts of their speech and to display emotions. One of the important ways humans display emotions is through facial expressions which are a very important part of communication. Though nothing is said verbally, there is much to be understood about the messages we send and receive through the use of nonverbal communication. Facial expressions convey nonverbal cues, and they play an important role in interpersonal relations [4, 5]. Automatic recognition of facial expressions can be an important component of natural human-machine interfaces; it may also be used in behavioral science and in clinical practice. Although humans recognize facial expressions virtually without effort or delay, reliable expression recognition by machine is still a challenge. There have been several advances in the past few years in terms of face detection, feature extraction mechanisms and the techniques used for expression classification, but development of an automated system that accomplishes this task is difficult [6]. In this paper, we present an approach based on Convolutional Neural Networks (CNN) for facial expression recognition. The input into our system is an image; then, we use CNN to predict the facial expression label which should be one these labels: anger, happiness, fear, sadness, disgust and neutral.

Methods

We developed CNNs with variable depths to evaluate the performance of these models for facial expression recognition. We considered the following network architecture in our investigation: [Conv-(SBN)-ReLU-(Dropout)-(Max-pool)]M - [Affine-(BN)-ReLU-(Dropout)]N - Affine - Softmax. The first part of the network refers to M convolutional layers that can possess spatial batch normalization (SBN), dropout, and max-pooling in addition to the convlution layer and ReLU nonlinearity, which always exists in these layers. After M convolution layers, the network is led to N fully connected layers that always have Affine operation and ReLU nonlinearity, and can include batch normalization (BN) and dropout. Finally, the network is followed by the affine layer that computes the scores and softmax loss function. The developed model gives the user the freedom to decide about the number of convlutional and fully connected layers, as well as the existance of batch normalization, dropout and max-pooling layers. Along with dropout and batch normalization techniques, we included L2 regularization in our implementation. Furthermore, the number of filters, strides, and zero-padding can be specified by user, and if they are not given, the default values are considered. As we will describe in the next section, we proposed the idea of combining HOG features with those extracted by convolutional layers by mean of raw pixel data. To this end, we utilized the same architecture described above, but with this difference that we added the HOG features to those exiting the last convolution layer. The hybrid feature set then enters the fully connected layers for score and loss calculation. Examples of seven facial emotions that we consider in this classification problem. (a) angry, (b) neutral, (c) sad, (d) happy, (e) surprise, (f) fear, (g) disgust We implemented the aforementioned model in Torch and took advantage of GPU accelerated deep learning features to make the model training process faster. 
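The architecture template in the Methods section can be sketched in Python as a function that expands the M convolutional blocks and N fully connected blocks into a flat list of layer names, while tracking the flattened feature size that reaches the first affine layer. This is a minimal illustration, not the authors' Torch implementation: the names `ConvSpec`, `FCSpec`, `conv_output_size`, and `build_layers`, the default values (32 filters of size 3×3, stride 1, padding 1, 256 hidden units), and the use of 2×2 max-pooling with stride 2 are all assumptions. The optional `hog_dim` argument mimics the hybrid model by adding the length of a HOG vector to the flattened convolutional features; the real HOG length depends on HOG parameters that the text does not specify.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ConvSpec:
    """One [Conv-(SBN)-ReLU-(Dropout)-(Max-pool)] block; defaults are illustrative."""
    num_filters: int = 32
    filter_size: int = 3
    stride: int = 1
    padding: int = 1
    spatial_batchnorm: bool = False
    dropout: float = 0.0     # 0.0 means the dropout layer is omitted
    max_pool: bool = False   # assumed 2x2 pooling with stride 2


@dataclass
class FCSpec:
    """One [Affine-(BN)-ReLU-(Dropout)] block; defaults are illustrative."""
    hidden_dim: int = 256
    batchnorm: bool = False
    dropout: float = 0.0


def conv_output_size(size: int, spec: ConvSpec) -> int:
    """Spatial size after the convolution and the optional 2x2/stride-2 pool."""
    out = (size - spec.filter_size + 2 * spec.padding) // spec.stride + 1
    return out // 2 if spec.max_pool else out


def build_layers(conv_specs: List[ConvSpec], fc_specs: List[FCSpec],
                 num_classes: int = 7, input_size: int = 48,
                 hog_dim: int = 0) -> List[str]:
    """Expand M conv blocks and N FC blocks into a flat list of layer names."""
    layers: List[str] = []
    size, channels = input_size, 1  # square gray-scale input, one channel
    for spec in conv_specs:
        layers.append(f"Conv({channels}->{spec.num_filters}, "
                      f"{spec.filter_size}x{spec.filter_size}, "
                      f"s={spec.stride}, p={spec.padding})")
        if spec.spatial_batchnorm:
            layers.append("SpatialBN")
        layers.append("ReLU")
        if spec.dropout > 0:
            layers.append(f"Dropout({spec.dropout})")
        if spec.max_pool:
            layers.append("MaxPool(2x2)")
        size, channels = conv_output_size(size, spec), spec.num_filters
    # Flatten the last conv output; the hybrid model appends a HOG vector here.
    in_dim = channels * size * size + hog_dim
    for spec in fc_specs:
        layers.append(f"Affine({in_dim}->{spec.hidden_dim})")
        if spec.batchnorm:
            layers.append("BN")
        layers.append("ReLU")
        if spec.dropout > 0:
            layers.append(f"Dropout({spec.dropout})")
        in_dim = spec.hidden_dim
    # Final affine layer produces the class scores fed to the softmax loss.
    layers += [f"Affine({in_dim}->{num_classes})", "Softmax"]
    return layers


if __name__ == "__main__":
    net = build_layers(
        [ConvSpec(32, max_pool=True),
         ConvSpec(64, spatial_batchnorm=True, max_pool=True)],
        [FCSpec(256, batchnorm=True, dropout=0.5)],
    )
    print("\n".join(net))
```

With two 3×3 convolutional blocks that each end in pooling, a 48 × 48 input shrinks to 24 × 24 and then 12 × 12, so the first affine layer receives 64 × 12 × 12 = 9216 features, plus `hog_dim` in the hybrid case. Representing the architecture as data in this way is one simple means of letting the user toggle each optional component per layer.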

Dataset and Features

In this project, we used a dataset provided by the Kaggle website, which consists of about 37,000 well-structured 48 × 48 pixel gray-scale images of faces. The images are processed so that the faces are almost centered and each face occupies about the same amount of space in each image. Each image has to be categorized into one of seven classes that express different facial emotions: 0=Angry, 1=Disgust, 2=Fear, 3=Happy, 4=Sad, 5=Surprise, and 6=Neutral. Figure 1 depicts one example for each facial expression category. In addition to its class number (between 0 and 6), each image belongs to one of three sets: training, validation, or test. There are about 29,000 training images, 4,000 validation images, and 4,000 test images. After reading the raw pixel data, we normalized it by subtracting the mean of the training images from every image, including those in the validation and test sets. For data augmentation, we produced mirrored images by flipping the training images horizontally. To classify the expressions, we mainly used the features that the convolutional layers generate from the raw pixel data. As an additional exploration, we developed learning models that concatenate the HOG features with those generated by the convolutional layers and feed the result into the fully connected (FC) layers.
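The mean-subtraction and mirroring steps described above can be sketched with NumPy. This is a minimal illustration, not the authors' Torch code: the function names `augment_with_mirrors` and `normalize_splits` are hypothetical, the images are assumed to be float arrays of shape (N, 48, 48), and "the mean of the training images" is interpreted as a per-pixel mean image. The text does not say whether the mean is computed before or after augmentation; the sketch assumes the mirrored copies are added first, which makes the mean image left-right symmetric, so an image and its mirrored copy are centered consistently.

```python
import numpy as np

# Label order given in the dataset description (0=Angry ... 6=Neutral).
CLASS_NAMES = ["Angry", "Disgust", "Fear", "Happy", "Sad", "Surprise", "Neutral"]


def augment_with_mirrors(images, labels):
    """Append a horizontally flipped copy of every training image.

    Assumes images has shape (N, H, W); flipping does not change the label.
    """
    mirrored = images[:, :, ::-1]  # reverse the width (column) axis
    return np.concatenate([images, mirrored]), np.concatenate([labels, labels])


def normalize_splits(train, val, test):
    """Subtract the per-pixel mean of the training images from every split.

    Assumption: the mean is an (H, W) image computed on the training set only,
    so no statistics leak from the validation or test sets.
    """
    mean_image = train.mean(axis=0)
    return train - mean_image, val - mean_image, test - mean_image, mean_image


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Random stand-ins for 48 x 48 gray-scale faces (not real data).
    train_x = rng.integers(0, 256, size=(4, 48, 48)).astype(np.float32)
    train_y = np.array([0, 3, 5, 6])
    val_x = rng.integers(0, 256, size=(2, 48, 48)).astype(np.float32)
    test_x = rng.integers(0, 256, size=(2, 48, 48)).astype(np.float32)

    # Assumed order: augment first, then compute and subtract the mean.
    train_x, train_y = augment_with_mirrors(train_x, train_y)
    train_x, val_x, test_x, mean_image = normalize_splits(train_x, val_x, test_x)
    print(train_x.shape, [CLASS_NAMES[i] for i in train_y[:4]])
```

Computing the mean on the training split alone and reusing it for validation and test images matches the normalization described in the text and keeps the held-out sets untouched by training statistics.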

Conclusion

We developed various CNNs for a facial expression recognition problem and evaluated their performance using different post-processing and visualization techniques. The results demonstrated that deep CNNs are capable of learning facial characteristics and improving facial emotion detection. The hybrid feature sets, however, did not improve model accuracy, which suggests that the convolutional networks can intrinsically learn the key facial features from raw pixel data alone.
